Insights on Secure AI Infrastructure, Confidential Computing, and AI Computing at Scale

Explore the Corvex blog for expert perspectives on confidential computing, secure AI deployment, and innovations in cloud infrastructure. Whether you're building scalable AI models or navigating evolving cloud security standards, our blog delivers the latest strategies and technical deep dives from industry leaders.

Press Release:

Corvex to go Public in All-Stock Merger with Movano, Creating a Pure-Play Platform for Secure AI Infrastructure and High-Performance Inference

News

News

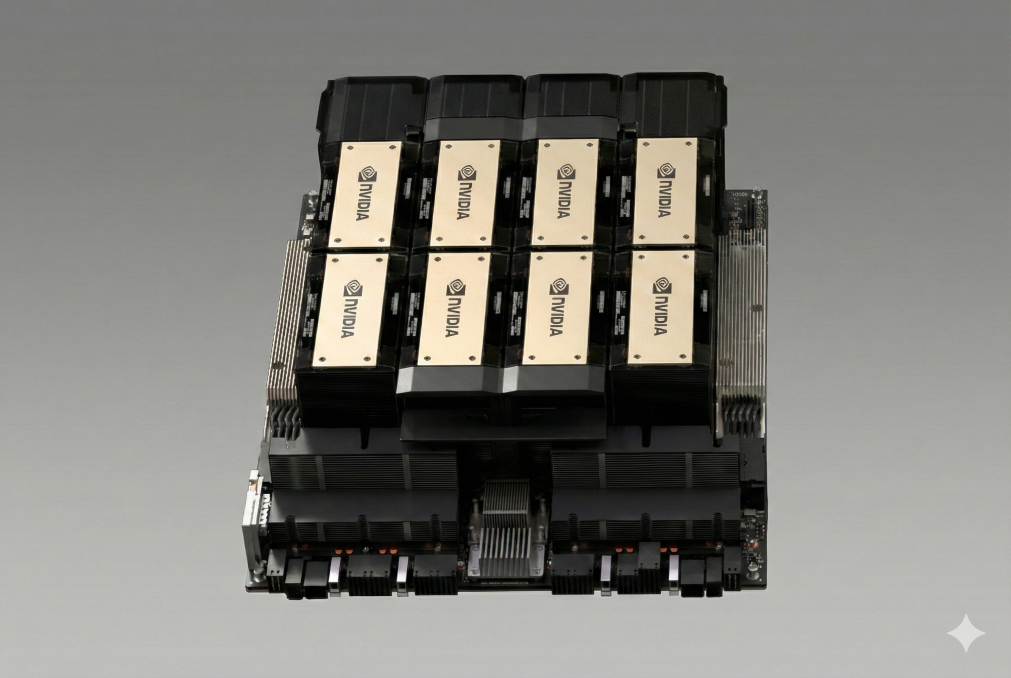

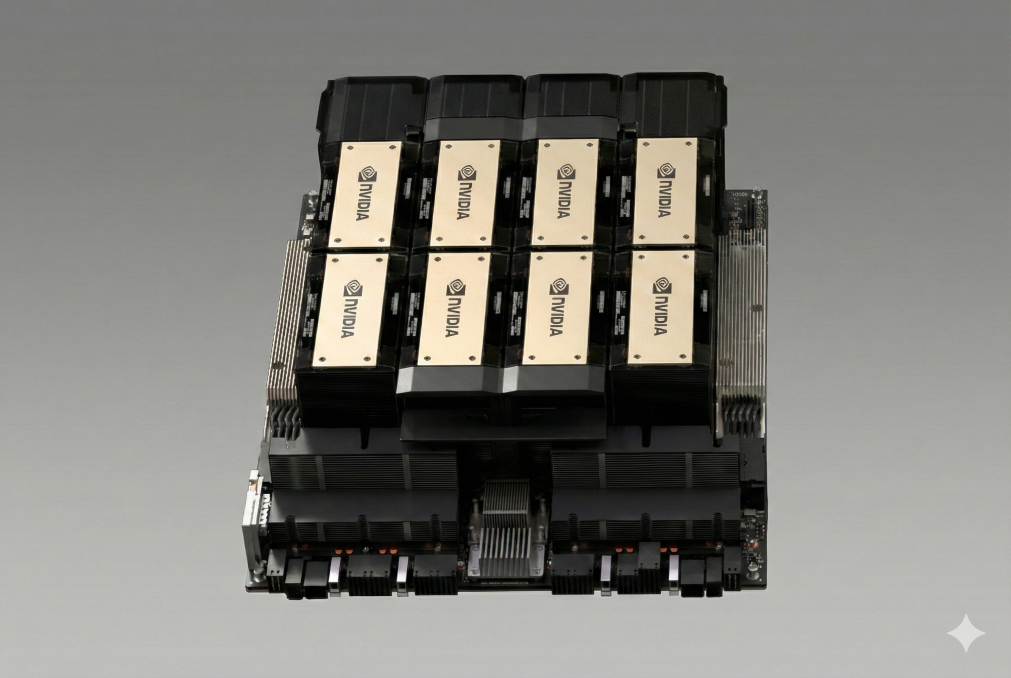

Corvex Secures Long-Term NVIDIA H200 GPU Deployment with AI-driven Provider of High-Performance Battery Technologies to Support Production AI Workloads

News

Press Release: Corvex to go Public in All-Stock Merger with Movano, Creating a Pure-Play Platform for Secure AI Infrastructure and High-Performance Inference

News

Interesting Reading: A Guide to GPU Rentals and AI Cloud Performance

In this guest-author piece for The New Stack, Corvex Co-CEO Jay Crystal outlines key factors in ensuring optimal AI Cloud performance.

Blog

Blog

Confidential Computing Meets NVIDIA HGX™ B200: Secure AI Without the Performance Trade-Off

Blog

Speed, Predictability, and Value: NVIDIA HGX™ B300 with Corvex

By combining NVIDIA Blackwell Ultra’s architectural advancements with optimized industrial-scale networking, Corvex enables enterprises to move beyond experimental clusters into predictable, high-utilization production.

Blog

Accelerating Our Mission to Build the Future of AI Cloud Computing

A blog by Corvex Co-CEOs Jay Crystal and Seth Demsey on the merger of Corvex and Movano.

Blog

Unlocking Healthcare AI: Solving IT Security Challenges via System Architecture

Unlock the full potential of AI in healthcare by solving the challenge of using PHI securely via system architecture.

Blog

Confidential Computing: How to Shield Your IP in Shared Clusters

Blog

Cool New Reality: The Advantage of Liquid Cooling for NVIDIA B200s

When we were designing our Blackwell deployment at Corvex, we faced a choice when it came to cooling: air versus liquid. We chose liquid cooling as the best way to ensure reliability in our data center and to keep our fleet of B200s running at peak performance. Here’s how we approached the problem.

Blog

AI Computing for HIPAA Compliance: Maintain Privacy with Confidential Computing

Artificial Intelligence is poised to revolutionize healthcare. But how does the industry maintain strict privacy and regulatory standards with the advent of new AI ecosystems? Corvex Co-CEO Jay Crystal discusses how GPU-based confidential computing enables HIPAA compliance without sacrificing computational efficiency.

Blog

NVIDIA B200s: Unlocking Scalable AI Performance with the Corvex AI Cloud

The NVIDIA B200 represents a major step forward in GPU architecture, enabling faster, more efficient training and inference across the most demanding AI workloads—from large language models (LLMs) to diffusion models and multimodal systems. At Corvex, we’ve integrated these new GPUs into an infrastructure purpose-built to remove bottlenecks, maximize security, and support AI developers at scale.

Blog

What Is Bare Metal—and Why It Matters for AI Infrastructure

When you're pushing the boundaries of AI model training or need rock-solid performance for real-time inference, infrastructure selection and configuration are everything. One option that’s gaining renewed attention in the AI space is bare metal—and for good reason.

Blog

Confidential Computing has Become the Backbone of Secure AI

The concept of confidential computing is becoming increasingly important. What does that mean, and why does it matter?

Blog

Enhancing AI Infrastructure with Rail Aligned Architectures

One of the most effective ways to improve the efficiency of AI workloads is through Rail Aligned Architectures (RAAs), a design strategy that enhances data throughput and GPU utilization.

Videos

Video

AI Computing for HIPAA Compliance

Artificial Intelligence is poised to revolutionize healthcare. But what does AI computing mean for HIPAA compliance?

Video

The Cool New Reality: Liquid Cooling for NVIDIA B200s

AI infrastructure isn’t just about raw performance anymore—it’s about keeping that performance stable, predictable, and safe under extreme thermal load. This video breaks down the differences between air cooling and liquid cooling and why the choice makes a big difference for NVIDIA's Blackwell architecture.

Video

Inside the NVIDIA B200: Performance, Cooling, and Real-World Use Cases

In this video, Corvex AI Co-Founder and Co-CEO Seth Demsey breaks down everything you need to know about the powerful NVIDIA B200 GPU, built on the new Blackwell architecture. Designed for AI at scale, the B200 offers massive improvements in memory, compute efficiency, and throughput—making it ideal for large model inference, fine-tuning, and foundation model development.

Video

Bare Metal: What It Is and Why It Matters

What is bare metal, and why does it matter for AI training, inference, and cloud performance? Corvex Co-CEO Seth Demsey unpacks the advantages.

Video

Confidential Computing: The Backbone of Secure AI Computing

In the era of advanced AI and large-scale data processing, security can no longer be an afterthought. Confidential computing has quietly become one of the most important—but often misunderstood—advances in cloud and data security.

Video

Rail-Aligned Architectures in High-performance Computing

What are Rail Aligned Architectures and why do they matter? Corvex Co-CEO Seth Demsey lends insight.

Video

AI Cloud Performance: Are you getting what you pay for?

The AI revolution is here. For many companies, that means leveraging their ordinary hyperscaler to access powerful GPU resources. These resources can enable game-changing product advancements but come at a significant cost. Ensuring you get the performance you’re paying for requires careful due diligence to get beyond cloud provider marketing hype.

Articles

Article

Top HIPAA-Compliant Cloud GPU Providers for Secure AI Model Training

Article

Confidential Computing with NVIDIA H200 GPUs: Security and Performance Explained

Article

GPU Leases 101: Glossary of Key Terms (H200 / B200 Edition)

Plain-English glossary covering host types, memory tiers, on-demand versus reserved capacity, egress, and other key terms you will meet when leasing NVIDIA H200 or B200 GPUs.

Article

Why is the B200 the best for Complex AI Workloads?

Unlike the cost-focused H200, the B200 brings higher performance, massive memory, and relentless throughput—ideal for demanding, compute-heavy AI teams.

Article

Comparison: Corvex vs Azure: The Right Choice for AI-Native Infrastructure

Corvex outperforms Azure for AI infrastructure with H200/B200/GB200 GPUs, flat pricing, and faster LLM performance—built for modern AI teams.

Article

Serving LLMs Without Breaking the Bank

Run your model on an engine that keeps GPUs more than 80% busy (vLLM, Hugging Face TGI, or TensorRT-LLM), use 8- or 4-bit quantization, batch and cache aggressively, and choose hardware with plenty of fast HBM and high-bandwidth networking. Corvex’s AI-native cloud pairs H200, B200, and soon GB200 NVL72 nodes with non-blocking InfiniBand and usage-based pricing (H200 from $2.15/hr) so you only pay for the compute you keep busy.

Article

What is the true cost of training LLMs? (And how to reduce it!)

The cost of training large language models (LLMs) isn’t just about how much you pay per GPU-hour. The real cost includes hardware performance, infrastructure efficiency, network design, and support reliability. This guide breaks down what actually impacts the total cost of training and how to reduce it without sacrificing performance.

Article

GPU Cloud vs Hyperscaler: Which AI Infrastructure Is Right for You?

AI developers and enterprises have more options than ever for compute infrastructure. You can go with traditional hyperscalers like AWS, Google Cloud, and Azure—or you can choose an AI-native GPU cloud built specifically for large-scale model training and inference. This guide breaks down the key differences to help you choose the right path.

Make Your Innovation Happen with Corvex

Amplify your AI performance and security.
